What do YOU mean by open source AI?

How OSPOs can drive alignment on the meaning of openness in AI

Ana Jiménez Santamaría’s blog post How Open Source Program Offices Make OSS and AI Work did a great job of speaking to the ways in which OSPOs can help organizations navigate AI risks. I believe OSPOs also have a critical role to play in challenging their organizations to get the story straight on what they mean by “open source AI”.

I don't think that means what you think it means...

This is not to enter the debate around "what IS open source AI", but instead to highlight the lack of ecosystem clarity in answering this question: what does your organization actually MEAN when it says open source AI? What can your users and contributors trust to be true when they use and contribute to your products and projects?

Here are just a few ways open source AI terminology is being used - sometimes at odds with each other.

- Government framing: “We need to ensure America has leading open models founded on American values.” (White House AI action plan)

- Industry marketing: “Llama 3.1… the first frontier-level open source AI model.” (Meta Llama, which has a mixed license - announcement)

- Foundation proposals: “Models purported as ‘open source’ frequently employ bespoke licenses with ambiguous terms.” (Linux Foundation, Model Openness Framework)

- Free Software vision: “Free ML requires software, raw training data, and scripts to grant users the four freedoms.” (FSF is working on a freedom and machine learning definition)

- Policy application: “The EU AI Act does not include a definition of ‘open source.’” (Assessment of EU AI Act on open source)

- Weights: “The product must include the weights and allow distribution of the weights…” (Open Weights definition)

- Public AI: “A vision for a robust ecosystem of initiatives that promote public goods, public orientation, and public use throughout every step of AI development and deployment.” (Mozilla on Public AI)

- Coding Assistant Confusion: "Llama 2, Mistral, Mixtral, Mistral-7B, Falcon, GPT-NeoX, GPT-J, Stable Diffusion, Whisper, BLOOM, T5, BERT, DistilBERT" (GitHub Copilot with Claude 4 providing me with a list of open source AI models, which are not all open in the same way.)

Opportunity for leadership and creativity in OSPOs

This is both a creative opportunity for ecosystem leadership and, I would say, a key responsibility for OSPOs right now: to help their organizations stand out by declaring that they are trustworthy, and open in the ways that matter to users.

- Clarify position: define what YOU mean by “open source AI” and the variations that may be true in certain use cases. Maybe data cannot be open. If so, be clear about the criteria that influence such a decision (safety, privacy, etc.).

- Educate internally: In the race to 'win', don't take on cultural debt. Teach employees about openness beyond licensing. Align and build a culture that reflects a commitment to community, as we have done in open source for years.

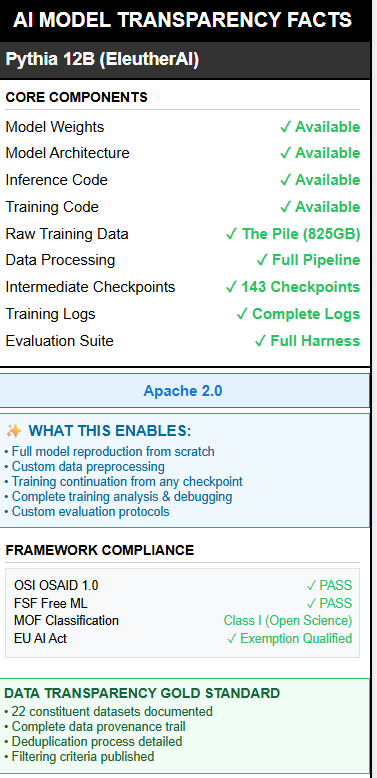

- Innovate user documentation: experiment with new ways of detailing 'openness' in a product or project that summarize 'complexity of ingredients' in simple ways anyone can understand, trust or challenge (cereal label for inspiration below).

- Build trust: In the race to 'win', don't take on reputational debt. Create processes and practices that developers and users can publicly verify. Get feedback from engineering teams and users alike, and act on it. Update your open source @ company name website with all of this nice alignment - other organizations will follow.
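To make the 'complexity of ingredients' idea a little more concrete, here is a minimal sketch of what a machine-readable openness label could look like. Everything in it is an assumption for illustration: the `Ingredient` fields, the `render_label` helper, the project name, and the example values are hypothetical and not taken from any existing standard or framework.

```python
from dataclasses import dataclass


@dataclass
class Ingredient:
    """One component of an AI release (hypothetical schema)."""
    name: str          # e.g. "model weights"
    is_open: bool      # whether this component is openly available
    license: str = ""  # license name as published, if open
    reason: str = ""   # why it is not open (safety, privacy, etc.)


def render_label(project: str, ingredients: list[Ingredient]) -> str:
    """Render a plain-text label summarizing what is and is not open."""
    lines = [f"Openness label: {project}"]
    for item in ingredients:
        status = "open" if item.is_open else "not open"
        detail = item.license if item.is_open else item.reason
        suffix = f" ({detail})" if detail else ""
        lines.append(f"- {item.name}: {status}{suffix}")
    open_count = sum(1 for item in ingredients if item.is_open)
    lines.append(f"{open_count} of {len(ingredients)} ingredients open")
    return "\n".join(lines)


# Made-up example values, purely for illustration.
label = render_label("example-model", [
    Ingredient("source code", True, license="Apache-2.0"),
    Ingredient("model weights", True, license="custom license"),
    Ingredient("training data", False, reason="privacy"),
])
print(label)
```

The point of a structure like this is not the code itself but that each claim of openness is stated per ingredient, with a reason where something is closed, so that users can trust or challenge it.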

No one has the full picture yet, but that's precisely why OSPOs matter: they can connect dots, create organizational clarity, and provide leadership in a space where trust is currently scarce.

NOTE: If at any point you've read this and thought "Emma doesn't know x about this particular thing", you are probably right, and that's kind of the point here. It's very hard to gather the big picture.

Available for consulting sessions, or sponsor me on GitHub to support writing like this.