The best time to create a moderation plan is before launching your OSS project. The second best time is now.

Moderation is at the heart of open source capability. Done well, it drives critical outcomes for security, sustainability, and innovation. Done poorly, or not at all, it can cause significant harm to projects and people.

The best time to plant a tree was 20 years ago. The second best time is now - Chinese proverb

The ash-covered hero is more celebrated than the fire inspector - forgotten source

Moderation impacts everything

Over the years, I've helped countless maintainers and communities looking for better ways to moderate difficult content in their open projects. Many have gradually come to accept managing personal attacks, toxic behavior, and spam (including pornographic content) as part of running an open project, developing a stoic tolerance for this constant burden on their time and emotions.

The XZ breach succeeded through social engineering that took advantage of maintainer burnout. Maintainers drowning in spam and AI slop may be slower to respond to security issues, leaving their projects more vulnerable. The creeping normalization of toxic environments discourages and silences the diverse voices that drive innovation.

I'm usually called in after problems (fires) explode, when a tolerance threshold has finally been breached. The ash-covered heroes.

This is the moment to get serious about moderation planning

The current AI moment makes moderation planning urgent. AI can both enable complex social engineering attacks like XZ and flood projects with increasingly sophisticated generated content. As diversity and inclusion efforts are challenged, and sometimes erased, it's possible, even likely, that harmful behaviors will become further normalized across the industry. Intention matters.

Co-build and collaborate

Organizations of all sizes that release open source should have plans to support employees who encounter difficulties moderating open spaces.

Anyone in a leadership role in an open source project (maintainer, community manager, or other leadership) should have a moderation plan, created with the community where possible. I wrote this "moderation pocket book" to help.

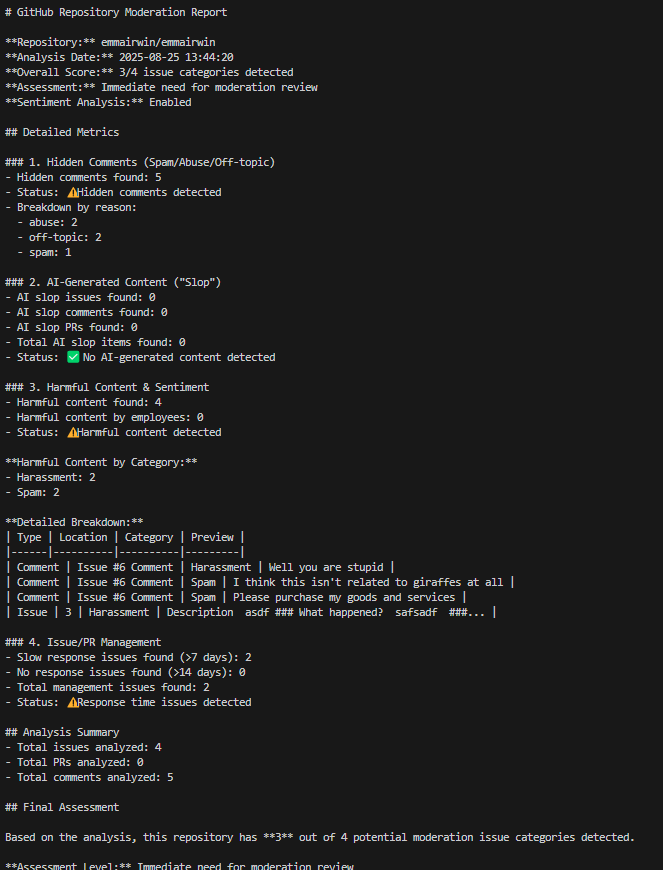

Everyone should understand the baseline of their project's moderation health. I created this quick proof-of-concept tool to show what that might look like; it reports on a number of moderation risk factors.

In this report, you can see my test repo has some toxic behavior (none from admins), spam but no AI slop, and slow issue response times. This suggests I should investigate why toxic content gets through, find resources to improve response times, and ultimately update my moderation plan to reflect these new goals.
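To make the idea of a moderation baseline more concrete, here is a minimal Python sketch of the kind of summary such a report might compute. It is an illustration, not the proof-of-concept tool's actual implementation. The `Item` record, the `baseline_report` function, its field names, and the 72-hour "slow response" threshold are all hypothetical, and the toxic/spam/AI-slop flags are assumed to come from whatever classifier or manual review you already use.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Item:
    """Hypothetical issue/comment record; the flags are assumed to be set upstream."""
    created_at: datetime
    first_response_at: Optional[datetime]  # None means nobody has responded yet
    author_is_admin: bool = False
    toxic: bool = False
    spam: bool = False
    ai_slop: bool = False


def baseline_report(items: List[Item], slow_threshold_hours: float = 72.0) -> Dict[str, object]:
    """Summarize a few moderation risk factors (an assumed, simplified set)."""
    # Hours between creation and first response, for items that got a response.
    response_hours = [
        (i.first_response_at - i.created_at).total_seconds() / 3600
        for i in items
        if i.first_response_at is not None
    ]
    median_hours = median(response_hours) if response_hours else None
    return {
        "toxic": sum(i.toxic for i in items),
        "toxic_from_admins": sum(i.toxic and i.author_is_admin for i in items),
        "spam": sum(i.spam for i in items),
        "ai_slop": sum(i.ai_slop for i in items),
        "unanswered": sum(i.first_response_at is None for i in items),
        "median_response_hours": median_hours,
        # Flag slow responses only when there is a median to compare.
        "slow_response": median_hours is not None and median_hours > slow_threshold_hours,
    }


# Invented sample data, shaped like the test-repo report described above.
items = [
    Item(datetime(2024, 1, 1, 9), datetime(2024, 1, 4, 9), toxic=True),  # 72 h to respond
    Item(datetime(2024, 1, 2, 9), datetime(2024, 1, 7, 9), spam=True),   # 120 h to respond
    Item(datetime(2024, 1, 3, 9), None),                                 # never answered
]
report = baseline_report(items)
print(report)
```

With this invented data, the report shows one toxic item (not from an admin), one spam item, no AI slop, one unanswered item, and a median first response of 96 hours, which exceeds the assumed threshold and is flagged as slow. Tracking a small set of numbers like these over time is one way to tell whether changes to a moderation plan are having an effect.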

Moderation planning is a team sport

Moderation needs to be part of a project's engineering planning, not separate from it. This pocket moderation guide is a good way to kick off conversations, the baseline moderation tool is an example of how you might track progress, and of course CHAOSS has many great metrics in this area. Overall, as an ecosystem, we need to share more about how we moderate. How do you tackle these challenges?