Can coding assistants make a TPM's life easier? 9 Hours of Misery and Hope

The Technical Program Manager role requires a strong mix of skills, including business analysis, software development, and project and people management. We hold a lot together, but we also depend on engineers and designers to prototype and build solutions. This means we're often waiting for their time, or trying to build our own prototypes in the non-existent gaps in our schedules.

Coding assistants promise to break us out of that cycle, so I decided to test the hypothesis.

The Challenge

Last week, I wrote a blog post focused on empowering employees to build better business cases for investment in open source.

I know that the Investment Framework, as one big markdown file, is a lot to process: it has a ton of business logic, requires technical knowledge of metrics and data, and expects the user to have experience writing executive communications (business cases). The perfect candidate for this experiment!

I decided to see if GitHub Copilot with Claude 4 could help me build a Python app, deployable to Hugging Face Spaces (using Gradio for the UI), for others to test. How hard could it be?

The Initial Vision

I gave Copilot the following commands:

- Create a chatbot from this markdown file: https://github.com/microsoft/OSPO/blob/main/learning_resources/using-oss/investment-framework.md

- Map motivations to CHAOSS metrics (https://chaoss.community/kbtopic/all-metrics/)

- Prompt users for a GitHub repository URL and let them select relevant CHAOSS metrics

- Get data for metrics from the GitHub REST API or GraphQL API, or by asking the user if neither returns data.

- Use strategic language when building the business case (the output)
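The data-gathering step in the list above (try the GitHub API, then GraphQL, then ask the user) is a fallback chain. It also helps to have the code record which source each number came from. Below is a minimal Python sketch of that pattern. The function name `get_metric_value` and the stub fetchers are hypothetical, and a real version would replace the stubs with actual REST and GraphQL requests.

```python
from typing import Callable, Optional, Sequence, Tuple

# A fetcher takes (repo, metric) and returns a number, or None when it has no data.
# Hypothetical stand-in for a real GitHub REST or GraphQL call.
Fetcher = Callable[[str, str], Optional[float]]


def get_metric_value(
    repo: str,
    metric: str,
    fetchers: Sequence[Tuple[str, Fetcher]],
    ask_user: Callable[[str, str], Optional[float]],
) -> Tuple[Optional[float], str]:
    """Try each data source in order, then fall back to asking the user.

    Returns (value, source) so callers always know where a number came from.
    """
    for source_name, fetch in fetchers:
        try:
            value = fetch(repo, metric)
        except Exception:
            # Treat a failing source (rate limit, network error) as "no data"
            value = None
        if value is not None:
            return value, source_name
    value = ask_user(repo, metric)
    return (value, "user") if value is not None else (None, "missing")


# Usage with stub sources: REST has nothing, GraphQL has the number.
value, source = get_metric_value(
    "octocat/Hello-World",
    "issues_closed",
    [("rest", lambda repo, metric: None), ("graphql", lambda repo, metric: 42.0)],
    ask_user=lambda repo, metric: None,
)
print(value, source)  # 42.0 graphql
```

Returning the source alongside the value is a deliberate choice. It makes it harder for made-up or missing data to slip into the business case unnoticed.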

Early Success (The Honeymoon Phase)

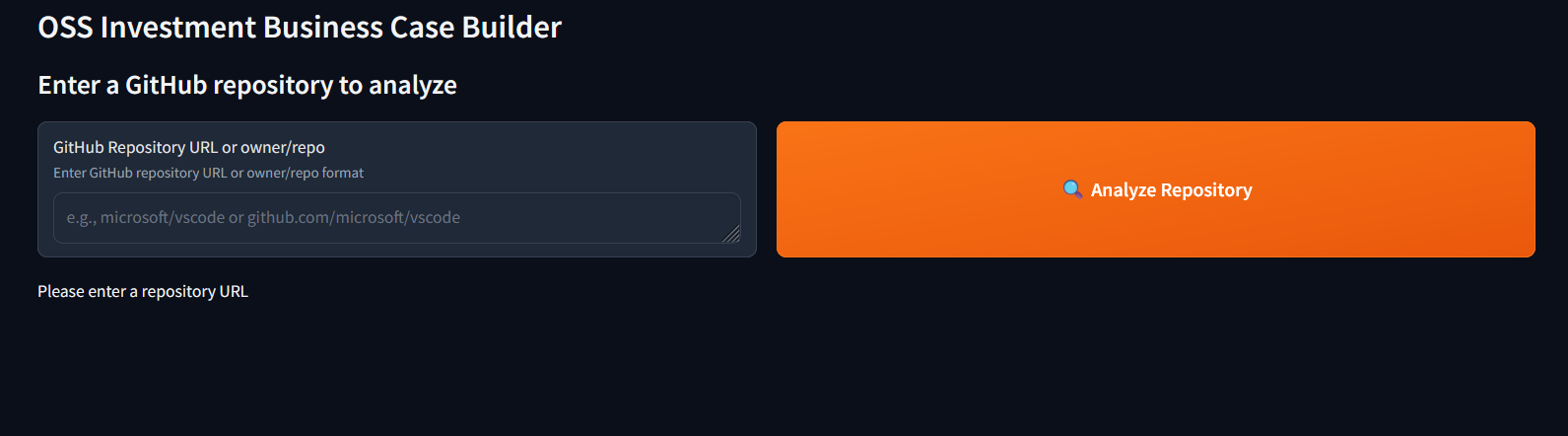

Hours 1-2: Copilot created a solid Python (chatbot) command-line script (with data I assumed was correct). Feeling greedy for something more user-friendly, I asked for a Gradio app I could publish on Hugging Face Spaces.

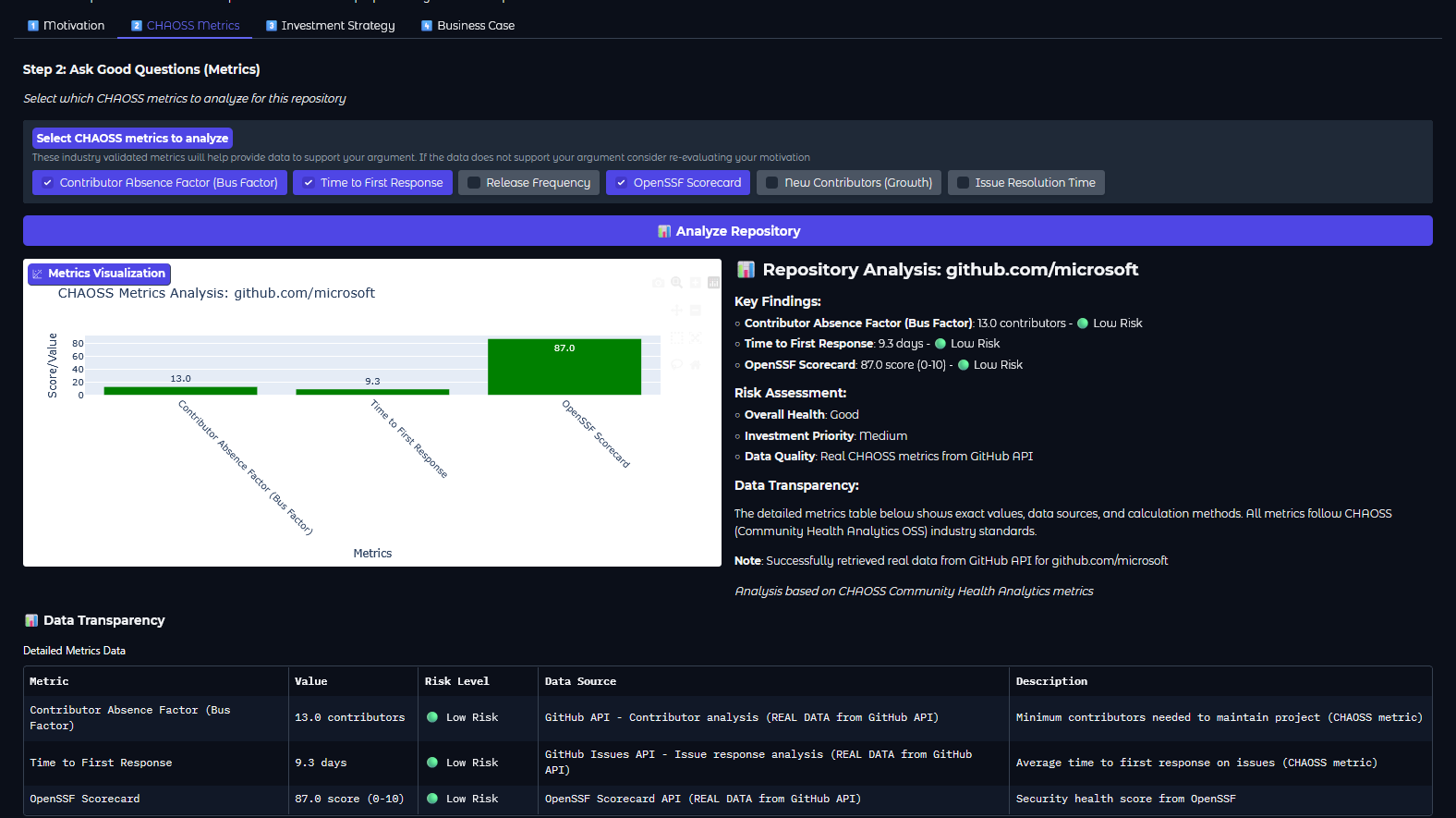

First Impressions: Pretty impressive! The UI looked good, even if some graphics were irrelevant or wrong. It even showed data! This gave me hope that I was nearly done 😅.

Things Got Complicated

My testing soon revealed that the data wasn't real, and that the metric calculations were based on Copilot's own interpretation rather than the CHAOSS definitions (although it had used the correct names).

Hours 3-5: I spent these hours giving Copilot explicit direction: which GitHub API data to use, how to obtain it, how to calculate specific metrics, and how to write a proper business case (despite my original direction, it had defaulted to something closer to a computer printout of fields). Each prompt seemed to take longer than the last, and it kept fixing things I didn't know needed fixing, or didn't understand...
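Much of this time went into pinning down how specific metrics are calculated. Here is a sketch of what "the CHAOSS definition, not the model's interpretation" can look like in code. It implements one CHAOSS metric, Time to First Response, computed from issue data you have already fetched. The sketch assumes a simplified reading of the metric: the time from an issue's creation to the first comment by someone other than its author. It also assumes a hypothetical, flattened data shape. GitHub's REST API nests the author under `user.login`, so you would map it first. Details such as excluding bots are left out, so check the CHAOSS metric page before relying on this.

```python
from datetime import datetime
from statistics import median
from typing import Iterable, List, Optional


def _parse(ts: str) -> datetime:
    # GitHub timestamps look like "2024-05-01T12:00:00Z"
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def first_response_hours(issue: dict) -> Optional[float]:
    """Hours from issue creation to the first comment by someone else, or None."""
    opened = _parse(issue["created_at"])
    responses = [
        _parse(comment["created_at"])
        for comment in issue.get("comments", [])
        if comment["author"] != issue["author"]  # the author's own replies don't count
    ]
    if not responses:
        return None
    return (min(responses) - opened).total_seconds() / 3600


def median_time_to_first_response(issues: Iterable[dict]) -> Optional[float]:
    """Median over issues that got a response; unanswered issues are skipped."""
    hours: List[float] = [h for h in map(first_response_hours, issues) if h is not None]
    return median(hours) if hours else None


# Hypothetical, already-flattened issue data (not real repository data).
issues = [
    {
        "created_at": "2024-05-01T00:00:00Z",
        "author": "alice",
        "comments": [
            {"author": "alice", "created_at": "2024-05-01T01:00:00Z"},
            {"author": "bob", "created_at": "2024-05-01T03:00:00Z"},
        ],
    },
    {
        "created_at": "2024-05-02T00:00:00Z",
        "author": "carol",
        "comments": [{"author": "dave", "created_at": "2024-05-02T05:00:00Z"}],
    },
    {"created_at": "2024-05-03T00:00:00Z", "author": "erin", "comments": []},
]
print(median_time_to_first_response(issues))  # 4.0
```

Skipping unanswered issues is only one possible choice. You might report them separately instead, because a median that ignores them can look better than reality.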

Disappearing Act

The most alarming moment: while working on bugs in the data visualization, Copilot suddenly removed ALL other content from the app. I was left staring at a single button.

Copilot insisted that this was how we "focus on the problem", and I decided to trust it... In a way, it was taking over, and I lacked the right prompts to re-establish myself as the director.

Version Control Chaos

Copilot kept creating new file versions with names that reminded me of my desktop ("backup of backup of backup" 😅). There was no naming logic or versioning scheme, just randomness; it clearly needed a versioning strategy of its own.

Once we had solved the focused problem, I asked it to 'bring back' the other parts of the app, which, it turns out, it had forgotten all about. 😮

The Breaking Point

Hour 6: Copilot hit an error and wouldn't work at all. I took the error message to Claude Desktop for help. It gave me a detailed technical explanation, but that felt like a rabbit hole.

Solution: I started a fresh chat and switched from the latest Claude model to the previous version. But this meant starting over, including dealing with Copilot's confusion about the previous version's file naming conventions 😔.

Back on Track

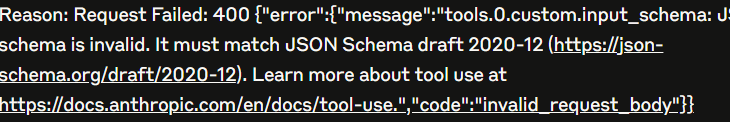

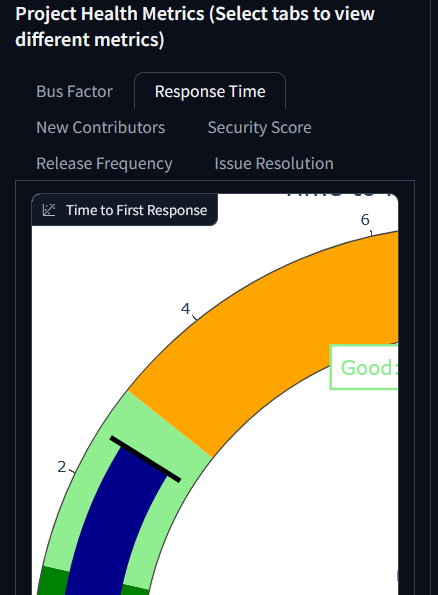

Hours 7-9: I eventually got the visualizations working on separate tabs, though the sizing was still off. We mostly resolved the data issues, resulting in a functional (if imperfect) prototype. Some of the tabs were still blank, and I wasn't sure why (I wish it had prompted me for more direction when it ran into walls).

[Image: Final prototype showing the CHAOSS metrics visualization, oddly zoomed in]

Final Result: You can find the full prototype in this Hugging Face Space. To test it, you need to fork it and add a GitHub token.

Key Lessons Learned

1. Start Simple and Commit Often

Don't feed a giant logic chart to AI and expect refinement to go smoothly, even if it seems promising at first.

Best Practice: Get one area of logic working, commit the code, then move to the next step. Test, test, test. A project plan is still important!

2. Set Clear Boundaries

I learned to add specific directions as we progressed:

- "Please don't change code without checking with me first"

- "Don't assume something is a bug unless I tell you it is—ask first"

- "Feel free to push back if you disagree; don't just tell me I'm right"

- "Remind me to commit versions that work before we try new things"

- "Please stay with the standards set for the technology and architecture we are building for (Python and Hugging Face Spaces)."

3. Handle Security from the Start

AI doesn't always follow security best practices by default:

- "Ensure all secrets/tokens go in .env file"

- "Make sure .env is in .gitignore"

- "Add proper licensing and attribution"

- "Add the Contributor Covenant 3.0, with reports going to emma at sunnydeveloper.com"
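The first two security instructions translate into very little code. Below is a minimal sketch. It assumes the token lives in an environment variable named `GITHUB_TOKEN`, which is an assumption, so use whatever name your app expects. Locally, the token is loaded from `.env` if the optional `python-dotenv` package is installed. On Hugging Face Spaces, you would add it as a Space secret, which the app sees as an environment variable. The helper names are hypothetical, and the `.gitignore` check is deliberately rough.

```python
import os
from pathlib import Path

try:
    # Optional: python-dotenv loads KEY=value pairs from a local .env file
    from dotenv import load_dotenv

    load_dotenv()
except ImportError:
    pass  # fall back to variables already set in the environment (e.g. Space secrets)


def get_github_token(var_name: str = "GITHUB_TOKEN") -> str:
    """Return the token from the environment, failing loudly if it is missing."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            f"{var_name} is not set. Add it to .env locally or as a Space secret."
        )
    return token


def gitignore_excludes_env(gitignore: Path = Path(".gitignore")) -> bool:
    """Rough check that .env is listed in .gitignore (ignores negations and other globs)."""
    if not gitignore.exists():
        return False
    patterns = {
        line.strip()
        for line in gitignore.read_text().splitlines()
        if line.strip() and not line.strip().startswith("#")
    }
    return bool(patterns & {".env", "/.env", ".env*", "*.env"})
```

Failing loudly when the token is missing beats silently falling back to sample data. That silent fallback is exactly the fake-data problem described earlier.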

4. Protect Your Business Logic

Copilot couldn't help injecting its own advice and its own assessment of the data.

- "Do not inject new steps, options, or interpretations of metrics beyond what is directed by the original document or by me"

- "Please tell me if you are using fake data"
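Both instructions can also be enforced in code, not only in prompts. The sketch below does three things. Each metric value carries a `source` label. Requested metrics are checked against an allowlist. Anything that is sample data gets flagged in the output instead of being presented as real. All names here are hypothetical, and the two allowlist entries are placeholders, not the Investment Framework's actual list.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Placeholder allowlist: fill this from the Investment Framework, not from the model.
ALLOWED_METRICS = {"time_to_first_response", "bus_factor"}

# Sources that count as real data; anything else (e.g. "sample") gets flagged.
REAL_SOURCES = {"github_rest", "github_graphql", "user"}


@dataclass(frozen=True)
class MetricValue:
    name: str
    value: float
    source: str  # e.g. "github_rest", "user", or "sample"


def check_requested(metrics: Iterable[str]) -> List[str]:
    """Reject any metric the framework doesn't define instead of improvising one."""
    requested = list(metrics)
    unknown = [m for m in requested if m not in ALLOWED_METRICS]
    if unknown:
        raise ValueError(f"Not in the framework: {unknown}")
    return requested


def render(values: Iterable[MetricValue]) -> str:
    """Format values for the business case, flagging anything that isn't real data."""
    lines = []
    for v in values:
        flag = "" if v.source in REAL_SOURCES else " [SAMPLE DATA, NOT REAL]"
        lines.append(f"{v.name}: {v.value} (source: {v.source}){flag}")
    return "\n".join(lines)
```

For example, `render([MetricValue("bus_factor", 2.0, "sample")])` labels the line as sample data. This makes the "tell me if you are using fake data" rule visible to the reader of the business case, not just to you.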

Time Breakdown Reality Check

Breakdown of the 9 hours:

- Hours 1-2: Initial success, false confidence

- Hours 3-5: Refinement and growing complications

- Hour 6: Complete breakdown and reset

- Hours 7-9: Rebuilding and achieving "good enough for a prototype" (but not working as intended)

Summary

This experience filled me with hope for the future (TPM roles are going to be more important than ever, especially if assistants like this unblock us from waiting on others), but also with caution for the present: timebox your efforts, and assert guardrails early and often.

The code was created with assistance from GitHub Copilot and Claude. I'm available for consulting sessions, or you can sponsor me on GitHub to support writing like this.